GANPaint

Team | Visual AI Lab, IBM Research

Responsibilities | Design Lead — User Research, Content, UX Design, Visualization Design

Project Overview

GANPaint is an interactive experience demonstrating the capabilities and potential uses of generative adversarial networks, or GANs. It is based on research developed by the Visual AI Lab at IBM Research. I led a multi-disciplinary team to create a narrative and an improved user experience for GANPaint, and to build out interaction patterns for an early precursor to generative fill. As design lead, I also worked with the Communications and Legal teams on how to represent IBM’s work in AI to the public more clearly and faithfully.

Original GANPaint technical demo

Existing Demo + Goals

Our team was asked to redesign an original tech demo built by the research team. While functional, the tech demo did not provide contextual information, and its interaction design was not immediately legible to lay audiences. The new demo needed to cater to developers, tech enthusiasts, and science professionals from various backgrounds. We therefore needed to meet the following goals:

Visitors need to have a meaningful demo interaction within one minute of visiting the page

Visitors should need no prior knowledge of AI to enjoy the demo and to understand what is going on and why it matters.

Visitors can find a clear path to learn more and to get involved

Design Process

These goals helped identify two tracks of work for the project: the overall narrative experience, and the interaction design of the “paint with GAN” module. To start, I wrote a first draft of the experience’s copy based on the research team’s publications, and workshopped that copy with the scientists for clarity and accuracy. With a rough draft in place, I created an initial mobile-first layout to establish pacing and hierarchy.

I then collaborated with a visual designer and a developer to iterate on the layout and interaction patterns, with the aim of improving responsiveness, affordances, and discoverability of features. Within a week, we built a coded, responsive, content-rich prototype that we could test with users.

Evolution of content, layout, features, and images for GANPaint demo site

User Testing + Iteration

With our coded prototype, we ran evaluative testing sessions with a handful of participants. Our aim was to gauge the discoverability of content, whether our interaction patterns were intuitive, and what overall messages people took from the experience. I drafted a testing protocol that asked participants to complete the following:

An unguided prototype review to examine what people were drawn to or confused by

A task analysis exercise to see if people could discover the ‘painting’ features and understand what they did

An interaction with an alternate layout as comparison

Final questions on overall comprehension and impressions

Demonstration of the GANPaint painting interaction

Progressive disclosure of more detailed information about GANs and the research team’s efforts

Within our participant group, we identified two general subgroups: those who focused on learning what a GAN is, and those who wanted to understand the applications and ramifications of image-generation technologies overall. We incorporated feedback from both groups into the painting module’s final interaction design, as well as into the content and design of the information panel below it.

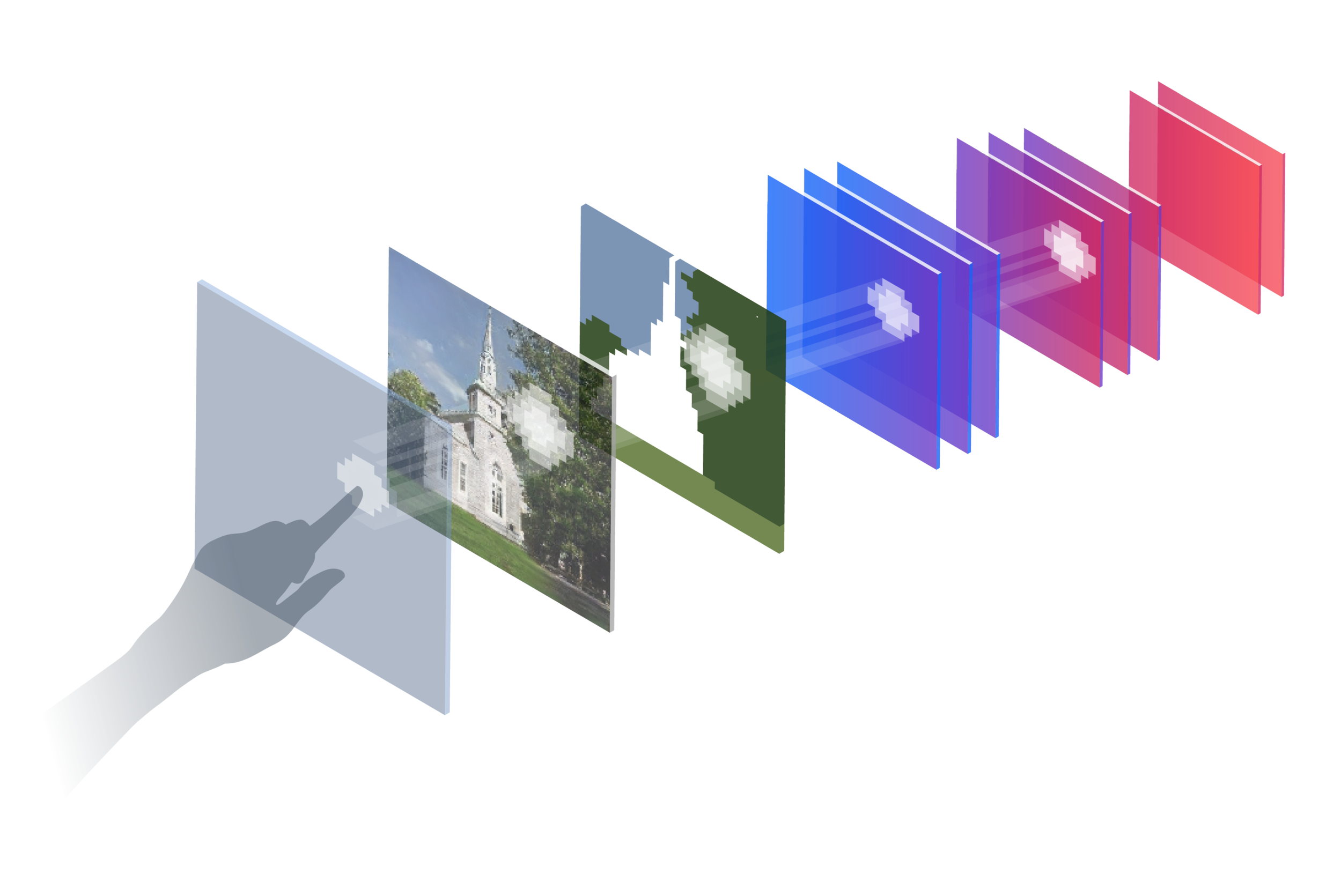

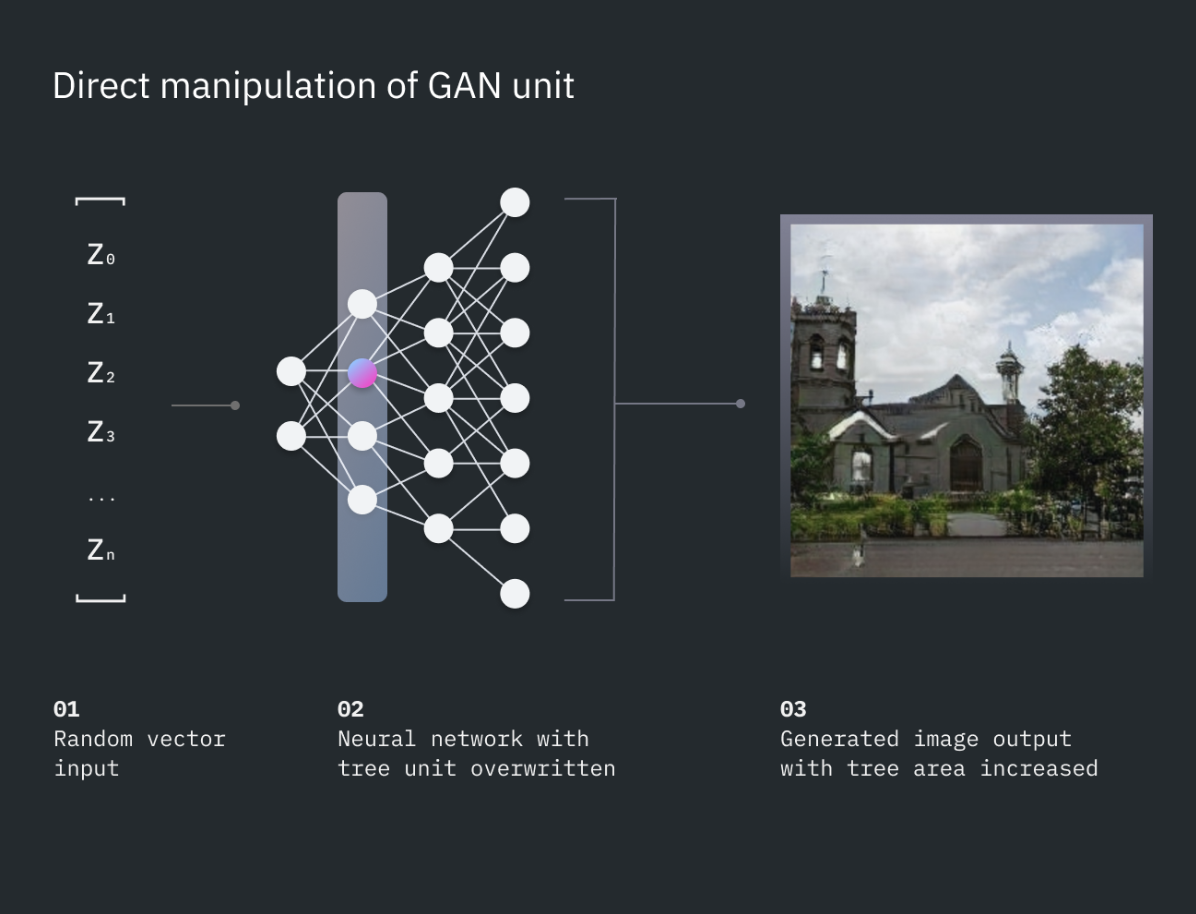

As finishing touches, I worked with the research team to develop two new graphics for the experience: one that conveyed how the “painting” process affects the image and the underlying GAN, and another that outlined how direct manipulation of a GAN works. I then collaborated with a motion designer to turn the first graphic into an animation for the top of the page.

Final Demo Design + Launch

After reviewing the completed experience with Legal, Communications, and the Research team, we launched the new GANPaint experience in May 2019, four weeks after project kickoff.

Final design layout for the GANPaint web experience